Google Search is able to understand human language using multiple AI models that all work together to find the most relevant results.

Pandu Nayak, Google’s VP of Search, explains in simple terms how these AI models work in a new post on the company’s official blog.

Nayak demystifies the following AI models, which play a major role in how Google returns search results:

- RankBrain

- Neural matching

- BERT

- MUM

None of these models work alone. They all help each other by performing different tasks to understand queries and match them to the content searchers are looking for.

Here are the key takeaways from Google’s behind-the-scenes look at what its AI models are doing and how it all translates into better results for searchers.

Google’s AI models explained

RankBrain

Google’s first AI system, RankBrain, was launched in 2015.

As the name suggests, the purpose of RankBrain is to determine the best order for search results by ranking them according to relevance.

Although it was Google’s first deep learning model, RankBrain continues to play a major role in search results today.

RankBrain helps Google understand how words in a search query relate to real-world concepts.

Nayak illustrates how RankBrain works:

“For example, if you search for ‘what is the title of the consumer at the highest level of a food chain’, our systems learn by seeing these words on different pages that the concept of a food chain may have to do with animals, and not human consumers.

By understanding and matching these words to their related concepts, RankBrain understands that you’re looking for what’s commonly referred to as an ‘apex predator.’”

Neural matching

Google introduced neural matching in search results in 2018.

Neural matching allows Google to understand how queries relate to pages using knowledge of broader concepts.

Rather than looking at individual keywords, neural matching examines entire queries and pages to identify the concepts they represent.

With this AI model, Google is able to cast a wider net when it scans its index for content relevant to a query.
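
To make the keyword-versus-concept distinction concrete, here is a toy sketch, assuming each query and page has already been mapped to a vector of concept scores. The concept axes, page names, and numbers are all hypothetical and hand-picked for illustration; Google has not published how neural matching represents concepts, and real systems learn dense embeddings rather than using labeled scores.

```python
import math

# Toy concept axes (hypothetical): management, leadership, personality, gardening.
# Real systems learn dense embeddings; these hand-picked numbers are illustration only.
QUERY_TEXT = "insights how to manage a green"
QUERY_VEC = [0.8, 0.6, 0.5, 0.1]

# Hypothetical pages: (page text, concept vector)
PAGES = {
    "color-personality-guide": ("leading a green personality type on your team", [0.7, 0.8, 0.9, 0.0]),
    "lawn-care": ("how to grow a green lawn", [0.0, 0.0, 0.0, 1.0]),
}


def keyword_overlap(query: str, page: str) -> int:
    """Naive matcher: count distinct words shared by the query and the page."""
    return len(set(query.split()) & set(page.split()))


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two concept vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def best_by_keywords() -> str:
    """Pick the page sharing the most literal words with the query."""
    return max(PAGES, key=lambda p: keyword_overlap(QUERY_TEXT, PAGES[p][0]))


def best_by_concepts() -> str:
    """Pick the page whose concept vector points closest to the query's."""
    return max(PAGES, key=lambda p: cosine(QUERY_VEC, PAGES[p][1]))


if __name__ == "__main__":
    print("keyword match:", best_by_keywords())  # lawn-care (shares "how", "to", "a", "green")
    print("concept match:", best_by_concepts())  # color-personality-guide
```

The keyword matcher is fooled by surface words like "green," while the concept comparison favors the page about management and personality, which is the kind of wider, meaning-based net the paragraph above describes.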

Nayak illustrates how neural matching works:

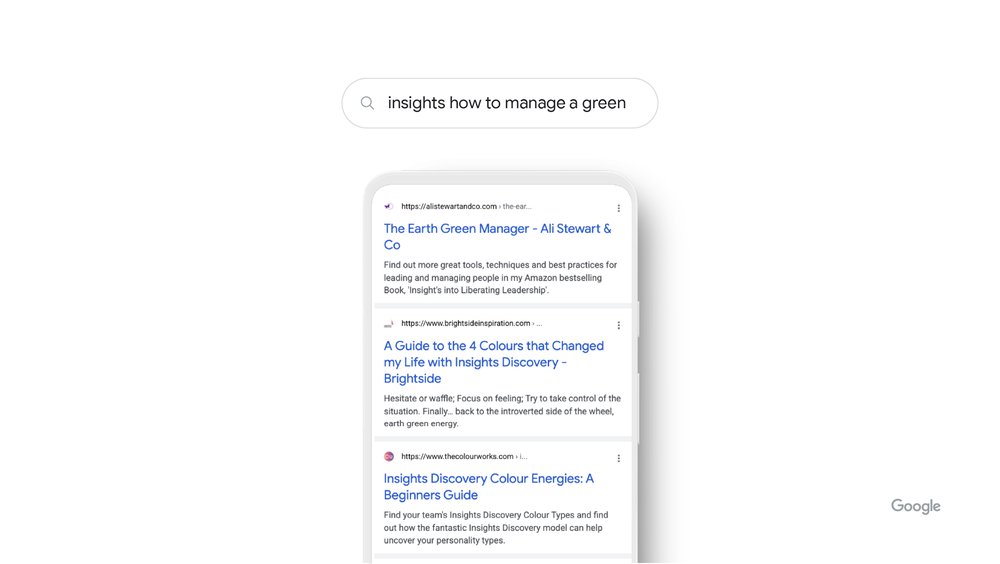

“Take the search ‘insights how to manage a green’, for example. If a friend asked you that, you’d probably be puzzled. But with neural matching, we’re able to make sense of it.

By examining the broader representations of the concepts in the query (management, leadership, personality and more), neural matching can decipher that this searcher is looking for management advice based on a popular color-based personality guide.”

Screenshot from blog.google/products/search/, February 2022


BERT

BERT was first introduced in 2019 and is now used in almost every English-language query.

It is designed to accomplish two things: retrieve relevant content and rank it.

BERT can understand how words relate to each other when used in a particular sequence, ensuring important words aren’t missed in a query.

This deeper understanding of language allows BERT to rank web content for relevance faster than other AI models.
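
The gap BERT closes is easiest to see by contrast with a bag-of-words keyword matcher. The sketch below is not BERT (BERT is a Transformer model that reads every word in the context of the words around it); it only shows, under an assumed stopword list, what a representation that discards small words and word order loses. The stopword list and the second query are hypothetical.

```python
# Hypothetical stopword list: small words a naive keyword matcher might ignore.
STOPWORDS = {"can", "you", "get", "for", "a", "the", "to", "at"}


def keyword_bag(query: str) -> frozenset[str]:
    """Keyword-only view: keep content words, discard small words and word order."""
    return frozenset(w for w in query.lower().split() if w not in STOPWORDS)


def token_sequence(query: str) -> tuple[str, ...]:
    """Order-preserving view: every word in position, the kind of input a sequence model reads."""
    return tuple(query.lower().split())


q1 = "can you get medicine for someone pharmacy"  # you pick up medicine for another person
q2 = "can someone get medicine for you pharmacy"  # another person picks up medicine for you

print(keyword_bag(q1) == keyword_bag(q2))        # True: both intents collapse to the same keywords
print(token_sequence(q1) == token_sequence(q2))  # False: order and the word "for" are kept
```

Both queries reduce to the same three keywords, so a keyword-only system cannot tell the two intents apart; a model that reads the full sequence still has the information needed to do so.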

Nayak illustrates how BERT works in practice:

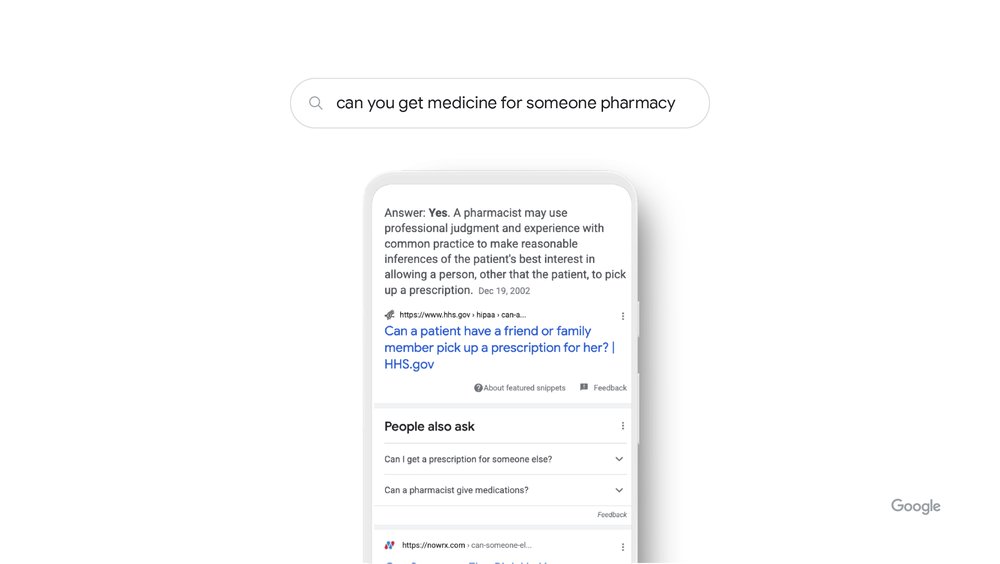

“For example, if you search for ‘can you get medicine for someone pharmacy’, BERT understands that you are trying to figure out if you can get medicine for someone else.

Before BERT, we took that short preposition [‘for’] for granted, mostly surfacing results about how to fill a prescription. Thanks to BERT, we understand that even small words can have big meanings.”

Screenshot from blog.google/products/search/, February 2022


MUM

Google’s latest AI milestone in Search, the Multitask Unified Model (MUM), was introduced in 2021.

MUM is a thousand times more powerful than BERT and capable of both understanding and generating language.

It has a more comprehensive understanding of information and world knowledge, having been trained across 75 languages and many different tasks at once.

MUM understands information across text and images, with more formats planned for the future. That’s what it means when you hear MUM referred to as “multimodal.”

Google is in the early days of realizing the potential of MUM, so its use in search is limited.

Currently, MUM is used to improve searches for COVID-19 vaccine information. In the coming months, it will come to Google Lens as a way to search using a combination of text and images.

Summary

Here’s a rundown of what Google’s main AI systems are and what they do:

- RankBrain: Ranks content by understanding how keywords relate to real-world concepts.

- Neural matching: Gives Google a broader understanding of concepts, which lets it cast a wider net when searching its index for relevant content.

- BERT: Allows Google to understand how words can change the meaning of queries when used in a particular sequence.

- MUM: Understands the world’s information and knowledge in dozens of languages and multiple modalities, such as text and images.

These AI systems all work together to find and rank the most relevant content for a query as quickly as possible.

Source: Google

Feature image: Igor Golovniov/Shutterstock