The World Wide Web is on the eve of a new stage of development called Web 3. This revolutionary concept of online interaction will require an overhaul of the entire Internet infrastructure, including search engines. How does a decentralized search engine work and how does it fundamentally differ from current search engines like Google? As an example, let’s look at a decentralized search engine for Web3 that was created by the Cyber project.

What’s wrong with Google?

Google is the most used search engine in the world. It accounts for around 80% of global search queries, but is often criticized for its opaque way of indexing links and generating search results. Although much of the technology behind its search algorithm has been described publicly, this does little for an end user trying to understand how it works: the algorithm takes so many parameters into account when producing results that it effectively behaves as a black box.

In practice, ordinary users face two fundamental problems. First, two different users performing exactly the same query will often receive drastically different results. Google has collected a treasure trove of data about its users and tailors its results to what it knows about them. It also takes into account many other parameters, including location, previous queries and local legislation. Second, and this is the complaint we hear most often about Google, the mechanism for indexing links is opaque to users: why is one piece of content ranked as highly relevant for a given query, while another, which is far more directly applicable to that query, appears well below the top twenty results?

Finally, the architecture of any search engine designed for Web2, be it Google, Yandex, Bing or Baidu, relies on protocols such as TCP/IP, DNS, URLs and HTTP/S, which means that it uses location-based addressing, i.e. URL links. The user enters a query into the search bar, receives a list of hyperlinks to third-party sites where the relevant content is located, and clicks on one of them. The browser then takes them to a well-defined physical address of a network server, that is, an IP address. What’s wrong with that? In fact, this approach creates a number of problems. First, it can make content inaccessible. For example, a hyperlink may be blocked by local authorities, not to protect the public from harmful or dangerous content, but for political reasons. Second, hyperlinks make it possible to falsify content, that is, to replace what sits behind a link. Content on the web is currently extremely vulnerable, as it can change, disappear or be blocked at any time.

Web 3 represents a completely new stage of development in which work with web content is organized in a fundamentally different way. Content is addressed by its own hash, which means it cannot be modified without changing that hash. With this approach, it is easier to find content in a P2P network without knowing where it is physically stored, i.e. which server holds it. Although not immediately obvious, this offers a huge advantage that will matter greatly in everyday Internet use: the ability to share permanent links that do not break over time. There are other advantages, such as copyright protection: it will no longer be possible to repost content a thousand times on different sites, because sites as we know them today will no longer be necessary. The link to the original content will remain the same forever.
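To make content addressing concrete, here is a minimal Python sketch. It assumes a plain SHA-256 hex digest as the address; real systems such as IPFS wrap the hash in a self-describing content identifier (CID), so the helper names `content_address` and `verify` and the address format are illustrative simplifications, not any library's API.

```python
import hashlib


def content_address(data: bytes) -> str:
    # Simplified stand-in for a real CID: the SHA-256 hex digest of the bytes.
    return hashlib.sha256(data).hexdigest()


def verify(address: str, data: bytes) -> bool:
    # Whoever serves the bytes, the requester can check them against the address.
    return content_address(data) == address


original = b"The link to the original content will remain the same forever."
link = content_address(original)

# A copy fetched from any peer is accepted only if it hashes to the same address.
assert verify(link, original)

# A modified copy no longer matches the link, so substitution is detectable.
tampered = original.replace(b"forever", b"for now")
assert not verify(link, tampered)

# Identical bytes reposted anywhere else produce the same address, not a new link.
assert content_address(bytes(original)) == link
```

The address depends only on the bytes, never on where they are stored, which is what makes links permanent and tampering detectable.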

Why is a new search engine needed for Web3?

Existing global search engines are centralized databases with restricted access that everyone has to trust. These search engines were developed primarily for the client-server architecture of Web 2.

In the content-oriented Web3, the search engine loses its exclusive power over search results: this power passes to the participants of the peer-to-peer network, who themselves decide how cyberlinks are ranked (a cyberlink is a link between pieces of content, not a link to an IP address or domain). This approach changes the rules of the game: no more arbitrary Google with its opaque link-indexing algorithms, no more need for crawler bots that track content changes on sites, and no more risk of being censored or falling victim to a privacy breach.

How does a Web 3 search engine work?

Let’s take as an example the architecture of a search engine designed for Web 3 on the Cyber protocol. Unlike other search engines, Cyber was designed from the start to interact with the World Wide Web in a new way.

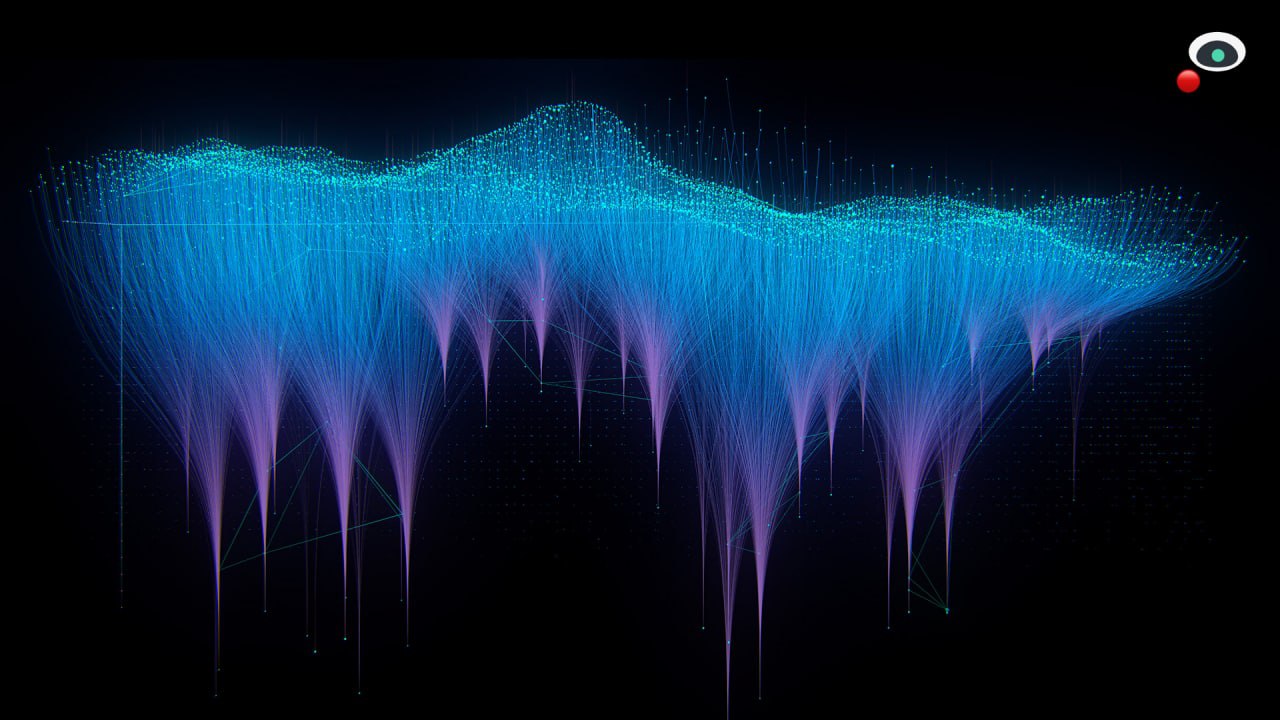

A decentralized search engine differs from centralized ones like Google in that links to content are organized in a knowledge graph in which peers exchange information without being tied to centralized nodes. Users find the desired content by its hash, and the content itself is stored by other members of the network. Once the content is found and downloaded, the user becomes one of its distribution points. This mode of operation resembles torrent networks, which provide reliable storage, resist censorship and help organize access to content in the absence of a good or direct Internet connection.
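The lookup pattern described above can be sketched in a few lines of Python. The `Peer` and `ProviderIndex` classes and the `publish`/`fetch` helpers are hypothetical: the index is a single in-memory dictionary standing in for the distributed lookup a real P2P network would use, and SHA-256 again stands in for a real content identifier.

```python
import hashlib


def content_address(data: bytes) -> str:
    # Simplified stand-in for a real CID: the SHA-256 hex digest of the bytes.
    return hashlib.sha256(data).hexdigest()


class Peer:
    def __init__(self, name: str) -> None:
        self.name = name
        self.blobs: dict[str, bytes] = {}  # content address -> bytes held locally


class ProviderIndex:
    """Hypothetical shared index: content address -> names of peers holding it."""

    def __init__(self) -> None:
        self.providers: dict[str, set[str]] = {}

    def announce(self, address: str, peer_name: str) -> None:
        self.providers.setdefault(address, set()).add(peer_name)


def publish(peer: Peer, data: bytes, index: ProviderIndex) -> str:
    address = content_address(data)
    peer.blobs[address] = data
    index.announce(address, peer.name)
    return address


def fetch(address: str, requester: Peer, online: dict[str, Peer], index: ProviderIndex) -> bytes:
    # Ask any known provider that is currently online; no server location is needed.
    for name in sorted(index.providers.get(address, set())):
        provider = online.get(name)
        if provider is None:
            continue  # this provider is offline
        data = provider.blobs.get(address)
        if data is not None and content_address(data) == address:
            # After downloading, the requester becomes a distribution point too.
            requester.blobs[address] = data
            index.announce(address, requester.name)
            return data
    raise LookupError("no online peer provides this content")


peers = {name: Peer(name) for name in ("alice", "bob", "carol")}
index = ProviderIndex()
addr = publish(peers["alice"], b"a page about Web3", index)

assert fetch(addr, peers["bob"], peers, index) == b"a page about Web3"
assert index.providers[addr] == {"alice", "bob"}

# Even with alice offline, carol still gets the content, this time from bob.
online = {name: p for name, p in peers.items() if name != "alice"}
assert fetch(addr, peers["carol"], online, index) == b"a page about Web3"
```

The design point is that `fetch` never needs a server location: it asks for a hash, checks what it receives against that hash, and then announces itself as a new provider, which is how widely requested content gains more distribution points.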

To add content to the knowledge graph in the Cyber protocol, a user must submit a transaction containing a cyberlink. This is similar to the payload field of an Ethereum transaction, except that the data is structured. The transaction is then validated through Tendermint consensus, and the cyberlink is included in the knowledge graph. Every few blocks, Cyber recalculates the rank of all content in the Knowledge Graph using an algorithm called cyberRank. Like PageRank, cyberRank ranks content dynamically, but it also uses an economic mechanism to protect the knowledge graph against spam, cyber-attacks and selfish user behavior.
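As a rough illustration of what "structured" means here, the sketch below models a cyberlink transaction as an account plus a list of (from, to) pairs of content addresses, together with a toy graph that validates and stores it. The class and field names are hypothetical, consensus is omitted entirely (`apply` simply runs as if the transaction had been accepted), and the `Qm...` strings are placeholders rather than real CIDs.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Link:
    # Both ends are content addresses (hashes), not URLs or IP addresses.
    from_cid: str
    to_cid: str


@dataclass
class CyberlinkTx:
    # Hypothetical, simplified message: the signing account plus structured link data.
    account: str
    links: list[Link]


@dataclass
class KnowledgeGraph:
    # Each edge records which account vouched for which link.
    edges: set[tuple[Link, str]] = field(default_factory=set)

    @staticmethod
    def validate(tx: CyberlinkTx) -> None:
        if not tx.links:
            raise ValueError("transaction carries no links")
        for link in tx.links:
            if not link.from_cid or not link.to_cid:
                raise ValueError("both ends of a cyberlink must be content addresses")
            if link.from_cid == link.to_cid:
                raise ValueError("a cyberlink must connect two different pieces of content")

    def apply(self, tx: CyberlinkTx) -> int:
        # In the real network this happens only after validators agree on the block;
        # here we just validate and store. Returns the number of new edges.
        self.validate(tx)
        before = len(self.edges)
        self.edges.update((link, tx.account) for link in tx.links)
        return len(self.edges) - before


graph = KnowledgeGraph()
tx = CyberlinkTx(account="alice", links=[Link("QmQueryPlaceholder", "QmAnswerPlaceholder")])
assert graph.apply(tx) == 1
assert graph.apply(tx) == 0  # the same account re-linking the same pair adds nothing
```

Unlike a free-form payload, every field here has a fixed meaning, so the network can check each link before it enters the graph.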

The users and validators of Cyber’s decentralized search engine together form a supercomputer. When computing rankings over the Knowledge Graph, Cyber outperforms existing CPU-based blockchain computers by orders of magnitude, because its calculations parallelize well and run on GPUs. As a result, a new cyberlink becomes part of the knowledge graph almost instantly and is ranked within a minute. Even paid advertising in AdWords cannot deliver that speed, let alone good old organic search, where indexing can sometimes take months.

Ranking in a decentralized search engine for Web 3

At the core of Cyber is the Content Oracle: a dynamic, collaborative and distributed knowledge graph formed by the work of all participants in the decentralized network.

One of the main tasks facing the developers of a decentralized search engine is designing the mechanisms that rank links; in a Web3 search engine, the thing being ranked is a cyberlink to relevant content. The Cyber protocol implements this through tokenomics.

At the heart of this tokenomics is the idea that users should have a stake in the long-term success of Superintelligence. To obtain the tokens used to index content, V (volts), and to rank it, A (amps), a user must lock H (hydrogen) tokens for a certain period. H, in turn, is produced by staking the mainnet token (BOOT for Bostrom and CYB for Cyber). Cyber users thus access knowledge graph resources with a network token while earning staking revenue, much as on Polkadot, Cosmos or Solana.
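The resource chain in this paragraph (stake the mainnet token, obtain H, lock H to mint V or A) can be modeled as a simple state machine. This is a sketch under loudly stated assumptions: the one-to-one staking ratio and the linear minting rate below are hypothetical numbers chosen for readability, and the real network's formulas, unlocking rules and staking rewards are not modeled.

```python
from dataclasses import dataclass, field

# Hypothetical parameters for illustration only; the real network defines its own rules.
H_PER_STAKED_TOKEN = 1   # assumption: staking mints H one-to-one
PERIODS_PER_UNIT = 10    # assumption: 1 V or A per H locked for 10 periods


@dataclass
class Account:
    boot: int                  # liquid mainnet tokens (BOOT on Bostrom, CYB on Cyber)
    h: int = 0                 # H obtained by staking
    locked_h: int = 0          # H currently committed to minting V or A
    resources: dict[str, int] = field(default_factory=lambda: {"V": 0, "A": 0})

    def stake(self, amount: int) -> None:
        if not 0 < amount <= self.boot:
            raise ValueError("invalid stake amount")
        self.boot -= amount
        self.h += amount * H_PER_STAKED_TOKEN

    def lock(self, resource: str, amount_h: int, periods: int) -> int:
        # V ("volts") is used to index content, A ("amps") to rank it.
        if resource not in self.resources:
            raise ValueError("resource must be 'V' or 'A'")
        if not 0 < amount_h <= self.h or periods <= 0:
            raise ValueError("invalid lock")
        self.h -= amount_h
        self.locked_h += amount_h
        minted = amount_h * periods // PERIODS_PER_UNIT
        self.resources[resource] += minted
        return minted


acct = Account(boot=1_000)
acct.stake(500)
assert acct.h == 500 and acct.boot == 500
assert acct.lock("V", 200, periods=30) == 600  # 200 * 30 // 10
assert acct.lock("A", 100, periods=10) == 100  # 100 * 10 // 10
assert acct.h == 200 and acct.locked_h == 300
```

The point of the model is the dependency chain: V and A can only come from H, and H only from staking, so anyone who wants indexing or ranking power must first commit tokens to the network.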

It is true that the ranking of cyberlinks created by an account depends on the number of tokens it holds. But if tokens have such an impact on search results, who will own them in the first place? Seventy percent of the genesis tokens will be offered to users of Ethereum and its applications, as well as to users of the Cosmos network. The distribution will be based on an in-depth analysis of activity on these networks, so most of the stake will go to users who have proven their ability to create value. Cyber believes this approach will lay the groundwork for the semantic core of the Great Web, which will help civilization overcome the difficulties it faces.
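One way to see how token holdings can shape ranking is a PageRank-style power iteration in which each cyberlink's weight equals the stake of the account that created it. This is an illustrative assumption in the spirit of cyberRank, not its actual formula; the function name, the damping factor of 0.85 and the iteration count are conventional PageRank choices picked for the example, and the `Qm...` names are placeholders.

```python
from collections import defaultdict


def stake_weighted_rank(edges, stake, damping=0.85, iterations=50):
    """PageRank-style power iteration where each link is weighted by its author's stake.

    edges: iterable of (from_cid, to_cid, account) triples
    stake: mapping account -> token balance (hypothetical input)
    """
    nodes = set()
    out_weight = defaultdict(float)   # total stake-weight leaving each node
    incoming = defaultdict(list)      # node -> [(source node, weight), ...]
    for src, dst, account in edges:
        nodes.update((src, dst))
        weight = float(stake.get(account, 0))
        if weight > 0:  # links from accounts without tokens carry no weight
            out_weight[src] += weight
            incoming[dst].append((src, weight))

    n = len(nodes)
    if n == 0:
        return {}
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Rank sitting on nodes with no weighted outgoing links is spread evenly.
        dangling = sum(rank[v] for v in nodes if out_weight[v] == 0)
        new_rank = {}
        for v in nodes:
            inflow = sum(rank[u] * w / out_weight[u] for u, w in incoming[v])
            new_rank[v] = (1 - damping) / n + damping * (inflow + dangling / n)
        rank = new_rank
    return rank


links = [
    ("QmQuery", "QmUseful", "holder"),
    ("QmQuery", "QmSpam", "spammer"),
    ("QmQuery", "QmSpam", "bot"),
]
stake = {"holder": 1_000, "spammer": 1, "bot": 0}
ranks = stake_weighted_rank(links, stake)
assert ranks["QmUseful"] > ranks["QmSpam"]
assert abs(sum(ranks.values()) - 1.0) < 1e-9
```

In this toy graph, a link backed by 1,000 tokens pulls far more rank than one backed by a single token, and a link from an account with no tokens adds nothing, which is the kind of economic spam resistance the protocol relies on. Each iteration also updates every node independently from the previous rank vector, which is why computations of this shape parallelize well on GPUs.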

What will an ordinary user see in a decentralized search engine?

Visually, search results in the Cyber protocol will differ little from the familiar centralized search format, but there are two key benefits:

- Search results include the desired content itself, which can be read or viewed directly on the results page without going to another page.

- Buttons for interacting with apps on any blockchain and making payments to online stores can be embedded directly into search snippets.

How is the Cyber protocol tested?

Cyb.ai is an experimental browser-within-a-browser prototype. With it, you can search for content, browse it using the built-in IPFS node, index content and, most importantly, interact with decentralized applications. At the moment, Cyb is connected to a testnet, but after the launch of the Bostrom canary network on November 5, it will be possible to take part in the incredible Superintelligence bootstrapping process through Cyb.